Navigating the AI Era: Gleaning Insights from the Cloud Revolution

Technical progress in software tends to follow Schumpeter’s creative destruction: each new era produces new winners. This pattern has held across several platform shifts, including mainframes to PCs, desktops to mobile devices, unreliable networks to the internet, and on-premises software to cloud and SaaS. The same dynamic is now beginning to play out in AI. After decades of AI winters, when companies struggled to put AI in the hands of end consumers, we are finally on the verge of a meaningful platform shift, one that will allow for even greater value creation for AI companies. Although novel use cases driven by generative AI and large language models (LLMs) have received a lot of attention, their most promising applications remain speculative: most current tooling centers on creative work, such as text, audio, and video generation, which incumbents can easily add as a layer on top of their existing products. However, value creation will go beyond generative AI to include non-generative use cases such as data analysis, predictive modeling, and computer vision.

As AI brings about a new era in computing, who will win the race to capture value?

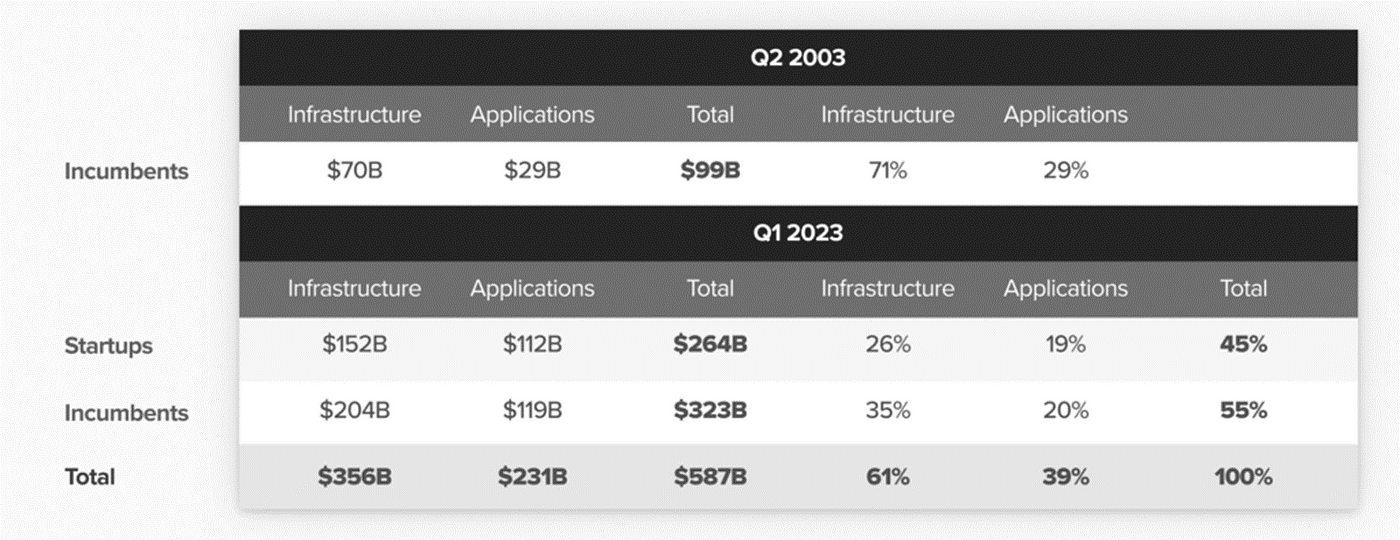

A study by a16z analyzed the most recent platform shift, from on-premises software to SaaS and cloud. It examined a sample of 242 public B2B SaaS companies over the past two decades to understand the competitive landscape between startups and incumbents, and to determine how value was captured up and down the software stack, from infrastructure to applications.

Platform shifts are generally positive-sum games

Since the Nasdaq’s low in Q2 2003, the total revenue of public B2B software firms has grown from $99B to $587B, a ~5.9x increase, with incumbents retaining a 55% share of the market while new entrants captured the remaining 45%. If the current “business as usual” ~5.9x rate of software expansion holds, public B2B software revenue would exceed $3T over the next two decades. While collaboration software like Slack has benefited from the network effects that SaaS made possible, the most successful applications have been cloud-based versions of familiar on-prem products: Salesforce competing against Siebel in CRM, Workday rivaling PeopleSoft in HRIS, ServiceNow against BMC in ITSM, NetSuite challenging SAP in ERP, and Figma versus Adobe in design. AI, however, goes beyond being a business model or a software delivery innovation. It is a new approach to accessing, synthesizing, and enhancing humanity’s collective knowledge and economic output. AI has the potential to generate value akin to the internet by opening entirely new ways of doing things, not just better ways of doing old things.
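As a back-of-the-envelope check on the $3T+ projection, the Python sketch below applies the same growth multiple a second time to today's revenue. It assumes the ~5.9x expansion simply repeats over the next two decades; the $99B and $587B figures are the ones quoted above, while the function names are illustrative and not part of the a16z study.

```python
# Revenue figures quoted above, in billions of USD (public B2B software).
REV_2003_Q2 = 99
REV_2023 = 587


def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    return end / start


def project(current: float, multiple: float) -> float:
    """Assumption: the next two decades repeat the same multiple once more."""
    return current * multiple


multiple = growth_multiple(REV_2003_Q2, REV_2023)
projection = project(REV_2023, multiple)

print(f"multiple: {multiple:.2f}x")
print(f"projected revenue: ${projection / 1000:.2f}T")
```

Running it prints a multiple of about 5.93x and a projection of roughly $3.48T, consistent with the “$3T+” figure.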

Market dynamics: Applications vs. Infrastructure

In 2003, the application/infrastructure revenue mix started at roughly 70/30; two decades later, in 2023, it had shifted to 60/40. Over this period, startups captured roughly 50% of application dollars but only 40% of infrastructure dollars.
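One way to relate these layer-level figures to the overall startup share is to weight each layer's capture rate by that layer's share of revenue. The Python sketch below assumes the 60/40 split refers to application vs. infrastructure revenue in 2023 and uses the approximate capture rates quoted above; it is a rough consistency check, not a calculation taken from the a16z study.

```python
# Assumption: 60/40 is the 2023 application/infrastructure revenue mix.
mix = {"applications": 0.60, "infrastructure": 0.40}

# Approximate share of each layer's revenue captured by startups (quoted above).
startup_capture = {"applications": 0.50, "infrastructure": 0.40}


def blended_startup_share(mix: dict, capture: dict) -> float:
    """Weight each layer's startup capture rate by that layer's revenue share."""
    return sum(mix[layer] * capture[layer] for layer in mix)


share = blended_startup_share(mix, startup_capture)
print(f"blended startup share: {share:.0%}")
```

The result, about 46%, is roughly in line with the ~45% overall share attributed to new entrants earlier, so the two sets of figures appear broadly consistent.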

This gap arises because applications are generally less defensible: advances in developer tools and ready-to-use core infrastructure make them easier to build, and therefore easier to displace. Convincing customers to replace their core infrastructure, by contrast, is challenging, as it involves long transition periods and longer adoption lead times. Switching costs are therefore higher for infrastructure, which in turn makes existing infrastructure stickier.

Is the Pace of Adaptation Quicker for Incumbents in the AI Era?

Given how quickly the infrastructure and application layers are evolving, we are already seeing incumbents adopt AI much faster than they adopted cloud. In the SaaS era, many incumbents lacked the right talent to transition to the cloud, the right product development practices, and the right organizational mindset. Some of the quick launches this time include BloombergGPT by Bloomberg, Firefly by Adobe, and Einstein GPT by Salesforce.

But AI comes with its own challenges: this new era of computing is still evolving, and many startups are still iterating with LLMs, in some cases combining multiple models, to get their use cases into production. For example, for an LLM-powered agent to work independently as a real-time customer support agent, it must understand different accents and dialects and respond to customer queries in real time. Open-source tools are good at text-to-speech conversion, but the real-time interaction stack remains proprietary to the startups building in this space.

With the platform shift comes a new infrastructure layer

While we figure out the more prominent applications beyond today’s text-based ones (content writing for marketing teams, extracting data from documents, summarizing internal HR policies for employees, powering customer support with chatbots, and semantic search in place of traditional keyword-based search), the infrastructure layer is evolving. As companies grow their data science capabilities, it is critical for them to support their data science teams with the right tooling.

The infrastructure layer also gives investors more comfort, and India is not far behind the West in building the infrastructure needed for generative AI. Foundation models and the hardware side of AI (GPUs/TPUs) are dominated by mega-cap tech companies, and only one such model, IndicBERT (a multilingual ALBERT model), has been built in India. Still, there is open space to build tooling around the LLM stack, which has yet to mature.

LLM API Stack

The foundation model space is becoming saturated but has yet to mature, with OpenAI leading the pack in a competitive setup similar to the early years of AWS in cloud. Major cloud providers such as AWS currently view AI as an extension of their existing cloud computing business. Given the high computational needs of AI models and ongoing chip shortages, these providers are using their resources to develop their own chips and expand data center capacity, while also introducing their own foundation models, so that they compete at both the compute and model layers.

Defensibility ultimately determines long-term value creation

Long-term value creation boils down to business fundamentals: hard-to-copy technology, network effects embedded in the product, commercial lock-in periods, control over the data lifecycle, workflow depth, and continuous innovation.

In the early stages, some potential sources of defensibility for generative AI products may favor incumbents from the SaaS era. Companies that scaled in the SaaS era, for example, can use AI to build a natural language user interface on top of their advanced workflow capabilities. As a result, incumbents can launch powerful generative AI products even if the underlying capabilities aren’t novel; those capabilities are simply presented to users in a different way. Incumbents may also have an advantage if they can capitalize on their existing client bases or leverage strong reputations for security and compliance.

As AI software increasingly functions as a system of prediction and execution, rather than just a system of record, it will fundamentally transform software workflows and user interfaces. Because this transformation is already happening quickly, agile businesses that can attract AI expertise and move fast with some distribution advantage are likely to succeed.

Stay tuned for the next piece, on how to choose LLMs and whether they really solve builders’ product needs.

A big thank you to the experts who shared their perspectives: Kumar Saurav from Vodex and Puneet Lamba from Aspiro.

If you found this piece helpful or interesting, please share it with your network.